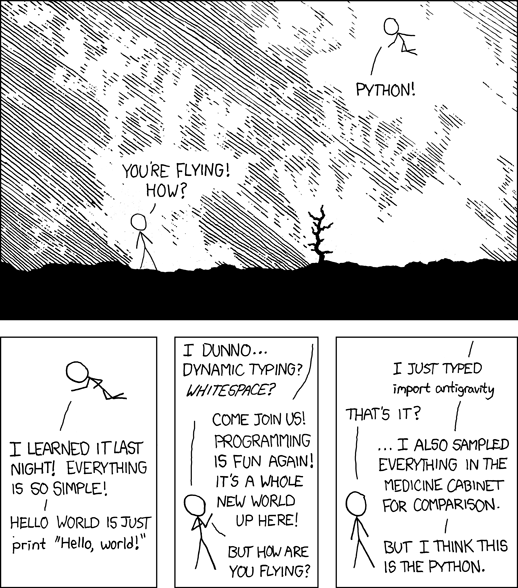

Python

Python 101

Getting error when running pip search, like this?

pip search yaml

ERROR: XMLRPC request failed [code: -32500]

RuntimeError: PyPI's XMLRPC API is currently disabled due to unmanageable load and will be deprecated in the near future. See https://status.python.org/ for more information.

It is a real tragedy: pip search has been disabled because of abuse of PyPI's XMLRPC API, so the only option left is the web-based search at

pypi.org.

For more info and alternatives, see

dev2qa

Note that pip install -r requirements.txt would still work if the package is listed with the correct name(s).

- Python is a programming language where whitespace matters!! As a result, file portability between Windows and Unix can become a real problem, because the two platforms end lines differently (CR/LF vs LF).

- Python 3 is a new version of the language that supersedes Python 2. It adds new constructs and changes some old ones, so a program written for Python 3 won't necessarily run in Python 2.x (and vice versa).

- ; is largely unneeded: statements end at the newline. ; can be used to separate statements when writing a one-liner in the interactive shell.


- Anaconda: a distribution of python, managed core modules and some 195 libraries. It is especially useful for getting Python in Windows and mac.

- It also has a package management (conda, ppt)

- Conda Environment provides a virtual env that includes isolation of dependent binaries. It has a way to provide for system-level environment, which base python's virtualenv can't. For setup, see

http://uoa-eresearch.github.io/eresearch-cookbook/recipe/2014/11/20/conda/

- many libraries are in conda (hosted by continuum), for things not in conda, can still run pip (which come with anaconda) to install such library.

- (Pypi is the equivalent of CPAN? EzInstall??)

- Miniconda is a lightweight version of anaconda. It doesn't come with anaconda's huge library, and lets the user pull in only what is needed.

- Pypi - Python Package Index, intended to be a comprehensive catalog of all open source python packages.

- pip - pypi install packager?? as of 2015, mostly just use pip

- iPython: interactive shell for python (and other lang now).

pip install ipython

pip install ipython[notebook]

ref: http://ipython.org/install.html

- Jupyter/iPython notebook: This allows writing text interspersed with python code.

good for testing ideas, data crunching and visualization type of project.

Anaconda comes with this, and typically run the server at

http://localhost:8888

Ref: nbviewer

And this OUseful blog describes 7 ways of running iPython notebooks. I particularly liked CoLaboratory implementation on Google Drive.

Authorea support for iPython notebook (as part of its web authoring platform) on the cloud was pretty neat too.

- No pointers?? see below

- Python2 FAQ is a good read once beyond the syntactic sugar and needing to know more internals in a real programming project.

Tools from Python Libraries

- python -m json.tool myfile.json # check a syntax of json file, if correct, spill json out to stdout. if err, will complain.

- python -c 'import crypt; print(crypt.crypt("My Password", "$6$My Salt"))' # create SHA512 encrypted password (the crypt module is Unix-only and was removed in python 3.13)

- python -m trace --trace myPythonCode.py # print each command before it runs, like bash -x script.bash

- numpy - basic numerical analysis

- scipy - build on numpy, more data analysis for scientific applications.

- pandas - kinda provide an equiv of R. Work with data frames. See

#dataframe below

Python idiosyncracies

If running a .py script and get an error of " : File not found", check to ensure that the python script does not end with DOS ^M characters. If needed,

cat old_script.py | dos2unix > new_script.py

and run the new script. It is a weird error (the kernel reads the #! line and looks for an interpreter literally named "python^M"), and I thought most programs could handle ^M these days...

#!/usr/bin/env python

Many python scripts start with that line. It effectively searches the user's PATH environment variable for python and runs whatever it finds as the interpreter.

Calling #!/home/username/bin/python may not always work, as PYTHONLIB won't be setup (unless done in the code).

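Python source is compiled into byte-code, which the interpreter then runs.

Typically a .pyc is written the first time a module is imported, so the first run incurs a small byte-compilation delay (this is caching of byte-code, not JIT compilation in the usual sense).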

Python 2 vs Python 3

Things to watch out for to write code that is more portable between python2 and python3

avoid has_key() in python2.

ie, avoid dict.has_key(k)

instead use k in dict.keys() (iterkeys() is python2 only)

or simply

k in dict

which works in both python2/3

use print(x) rather than print x, the latter does not work in python 3.

float division: eg, 27/3 in python3 automatically does floating point division (giving 9.0); python2 does integer division on ints unless you use:

from __future__ import division

or

27/float(3)
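A minimal sketch of the difference, written for python3 (under python2 the same results need the from __future__ import division line shown above). The variable names are only illustrative:

```python
true_div = 27 / 3        # float division in python3
floor_div = 27 // 3      # floor division, behaves the same in python2 and python3
mixed = 27 / float(3)    # forcing a float operand works in both versions

print(true_div, floor_div, mixed)
```

Using // whenever an integer result is wanted keeps the intent explicit in either version.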

python3 (3.6 and later) can use _ as thousand separators in numeric literals (instead of comma),

and it doesn't have to be groups of 3; it is for human reading and stripped by the interpreter.

Also note that it is *NOT* a "decimal point" like cents and dollars when only two digits are in a group.

(Why is Spanish and some other lang reverse role of comma and period?)

eg:

>>> print( 5_000_111_000_222_021 - 4_20010 )

5000110999802011

>>> print( 5_4321 + 100_00 )

64321

>>>

Distributions of Python

- python.org. avail for Windows, Linux, ...

- anaconda, by continuum.io. Win, OS X, Linux. Free for commercial use too. Include 300 popular packages. Additional packages can be installed via pip (eg awscli).

- Python 2.7 will be installed with Anaconda 2. Can be installed as user. Will setup PATH environment automatically.

- Include iPython (run in DOS command prompt)

- Jupyter (launch a web interface).

- Jupyter Qt runs in a unix terminal-like environment. Much better than the iPython (in DOS) env.

- No easy way to run pip. Need to use "Anaconda Prompt", which is DOS. So hard to run python packages like aws cli. May still want to use cygwin to provide a good terminal (at which point, may just want to use the cygwin python/pip).

- Python 3.5 also avail.

- ActiveState (mostly for windows? personally try to avoid, even though nothing wrong with it really, just kinda non standard).

Environment

PYTHONPATH - module search path

sys.path - despite the name, this is not the system PATH: it is the list of directories python searches for modules, initialized from the script's directory, PYTHONPATH, and the installation defaults. See https://docs.python.org/2/tutorial/modules.html#the-module-search-path

PYTHONSTARTUP - interactive startup file (commands listed here would be run as if they were typed in interactive shell).

# one good way to use the environment's PATH, but if not set, can at least have some default.

# https://districtdatalabs.silvrback.com/getting-started-with-spark-in-python

import os

import sys

if 'SPARK_HOME' not in os.environ:
    os.environ['SPARK_HOME'] = '/srv/spark'
SPARK_HOME = os.environ['SPARK_HOME']
sys.path.insert(1, os.path.join( SPARK_HOME, "python", "build"))

if 'PYTHONPATH' not in os.environ:
    os.environ['PYTHONPATH'] = "/home/system_web/local_python_2.7.9/lib/python2.7/site-packages/"
PYTHONPATH = os.environ['PYTHONPATH']
# os.path.join() throws away everything before an absolute path, so add each directory on its own
for p in ( PYTHONPATH, "/home/system_web/local_python_2.7.9/lib/python2.7/site-packages/", "/opt/python/2.7.9/lib/python2.7/site-packages/" ) :
    if p not in sys.path : # insert() does not check for duplicates
        sys.path.insert(1, p)
## os.environ['X'] raises KeyError if X is not set, hence the defaults above

# run linux os command from inside python:

import os

os.system( 'echo $PYTHONPATH' ) # runs the command in a subshell and returns its exit status; the output goes to the terminal and is not captured

os.system( 'env | grep PYTHON' )
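When the output of the command is needed inside the program, the standard subprocess module is one option. A small sketch, assuming python 3.7 or later; DEMO_VAR is a made-up variable name, and the child process is just another python so the example does not depend on a particular shell:

```python
import os
import subprocess
import sys

os.environ['DEMO_VAR'] = 'set-in-python'   # hypothetical variable, for illustration only

# run a child process and capture what it prints
result = subprocess.run(
    [sys.executable, '-c', 'import os; print(os.environ["DEMO_VAR"])'],
    capture_output=True, text=True, check=True,
)
child_output = result.stdout.strip()
print(child_output)
```

Changes made through os.environ are inherited by child processes, which is why the child can see DEMO_VAR here.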

Setting up the program on NixOS

System-wide, edit

/etc/nixos/configuration.nix

environment.systemPackages = with pkgs; [

python27Packages.ipython # does NOT provide python

python34Packages.ipython

python27Full # ipython does NOT depends on this package

];

As user:

nix-env -i python-2.6.9

Setting up the program on Windows

http://www.howtogeek.com/197947/how-to-install-python-on-windows/ has a step-by-step install, including how to setup environment variables.

https://www.continuum.io/downloads is the download page for Anaconda, including versions for windows (and os x, linux).

conda

need to have anaconda or miniconda installed, which typically setup some env in .bashrc

then can do:

conda info --envs # my installed conda env available for activation

conda update -n base -c defaults conda # but fails in centralized software NFS mounted env ?

# conda create --name abricate

conda create --name epi

conda activate epi

conda install -c bioconda abricate

conda remove abricate

# tbd...

conda install -c conda-forge -c bioconda -c defaults mlstn

eg of adding channel to get more sw

conda config --add channels defaults

conda config --add channels conda-forge

conda config --add channels bioconda

PIP

PyPI = Python Package Index - equiv of CPAN.

Allows installation of python libraries using "pip"

sudo yum install python-pip

-or- python setup.py install

pip install easybuild

pip list

pip show easybuild

pip uninstall enum

pip install enum34

Libraries are installed to /usr/lib/pythonN.N/site-packages/

List installed modules/libraries/packages

pip list

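import pip # only works with pip older than 10; get_installed_distributions() was removed from the public API in pip 10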

sorted(["%s==%s" % (i.key, i.version) for i in pip.get_installed_distributions()])

Ref:

http://stackoverflow.com/questions/739993/how-can-i-get-a-list-of-locally-installed-python-modules
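On newer pythons the same list can be built without importing pip at all. A sketch using the standard library importlib.metadata (python 3.8 or later); the variable name installed is just for this example:

```python
from importlib import metadata  # python 3.8+

installed = sorted(
    "%s==%s" % (dist.metadata["Name"], dist.version)
    for dist in metadata.distributions()
    if dist.metadata["Name"]  # skip broken entries that have no name
)
for line in installed[:5]:
    print(line)
```

From the shell, pip list or pip freeze remain the simplest way to get the same information.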

Python Environment/Virtual Environment

(Contrast this with Continuum's Conda, which do this and some more)

python3.6 -m venv ./venv # create a virtual env (python 3.6)

source ./venv/bin/activate

pip install -r requirements.txt # list all pip install packages in a requirement file

## below are old school, obsolete way to create/invoke virtual env

pyvenv ~/local_python_3.4 # create a virtual env (python 3.4)

# in python 2.7, use virtualenv ~/local_python_2.7 instead

# create vir env once for each version of python being used

source ~/local_python_3.4/bin/activate # activate virt env (change path accordingly for diff version of python)

pip install scipy # install module into virtual env using pip (eg for installing scipy)

easy_install scipy # install module into virtual env using easy_install (alternate of pip, don't need to do both)

example requirements.txt

docker

#re

regex

shutils

argparse # version 1.1

#logging # version 0.5.1.2 # neither of these logging worked for pip 21.3.1 (python3.6, in 2022-007-10)

#logging==0.4.9.6

Python Module

Python libraries are provided as modules, which can be imported. Python searches for modules in the directories listed in sys.path, which includes those named in the environment variable PYTHONPATH.

Types of modules:

- built-in modules. eg: import sys, os

- C library written as python extension. eg: from math import *; sin(5)

- a directory containing the specially created __init__.py file

- .py file. ie, don't need any special keyword to declare the file as module. just have list of functions def-ined in the file, and (typically) not actual actions when the .py file is "run".

- .pyc file. ie, byte-compiled version of a .py

- .pyo file. ie, -O optimized byte-compiled .py

- .pth file (as alternative to setting PYTHONPATH)

- .zip files can also be imported

When writing python modules, it is best if importing them does not output anything. Otherwise, when a consumer does import module_foo, it executes the code in that module and may output things that are not desirable.

https://docs.python.org/2/tutorial/modules.html#the-module-search-path has a good overview of modules:

__name__ contains the name of the module

if __name__ == "__main__" : # module is being executed directly, can place desired execution statement in here

when a module is imported, all its statements and definitions are executed (once, on first import). This is why any print statement in the main body of the module will be printed out at import

dir( __builtin__ ) lists all the names defined by python built-in (the module is called builtins in python3). Can use dir() with any module.
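The __name__ test can be sketched as follows. The file name greet.py and the function greet() are made up for this example:

```python
# contents of a hypothetical module file, say greet.py
def greet(name):
    """Return a greeting; importing the module only defines this function."""
    return "hello %s" % name

if __name__ == "__main__":
    # runs only for `python greet.py`, not for `import greet`
    print(greet("world"))
```

With this layout, import greet stays silent, while running the file directly prints the greeting.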

Packages - python dotted modules name. ie, provide hierarchy.

https://docs.python.org/2/tutorial/modules.html#packages

Python Language

0 is the index of the first element (like perl. unlike awk, which is 1).

[ ] = list. ordered items. think of array in most languages.

in python, it can also behave like a stack. ie think push/pop.

[].append() adds items (push), [].pop() removes the last one.

in reality it is a dynamic array (not a linked-list). items can still be removed from the middle of the list, at the cost of shifting the rest.

myList = [ 'a', 'b', 'c', 'c' ]

myList[1] # evals to 'b' # array syntax, 0-based index

myList[-2] # -ve wraps around, return 2nd from last item

myList[1:3] # slice

myList.append( 'e' ) # add item to list

del myList[2] # shrink list

L2 = [ 'ab', ['cde', 'fgh' ] ] # nested list

len(L2) # length of list, in this eg returns 2

L2[i][j] # 2D array index for nested array.

for x in myList : # items will be expanded for consecutive x

( ) = tuple, contain ordered elements. *immutable*

Strings are immutable sequences too, and behave much like tuples of characters.

point = (x, y)

t1 = ( 2, 2 )

t2 = 2, 4, 2, 8 # () syntax is optional when there is no ambiguity

t3 = ('xy', ('abc', 'def', 'ghi') ) # nested tuples

t3[i][j] # 2D array syntax works on nested tuples as well

t2[1:3] # slice syntax works on tuples too (but not on sets, which are unordered)

for x in t2 : # items will be expanded for consecutive x

items = set( myList ) # dup 'c' will be removed

{ } = dictionary/hash. key -> value list. eg ENV['HOME'] = '/nfshome/tin' # Perl: $ENV{HOME} = "/nfshome/tin"

dictionaries are mappings, not sequences.

codon['ATG'] = 'methionine'

codon.keys() # python2 also has iterkeys(); python3 only has keys()

codon.values()

len( codon )

resultTable['species']['homo sapiens'] = 1

resultTable = { 'species' : { 'homo sapiens': 1} } # nesting, what is really happening for 2D hash above

for k in codon : # same as for k in codon.keys()
    print( codon[k] ) # iteration for hash is automatically on hash key

Additional container datatypes, see

python3 collections

namedtuple()

ChainMap

OrderedDict

etc

Things that evaluate to False:

False # built-in boolean. but does not take FALSE

0

0.0 # float

0j # complex

bool( 0j ) # type-cast

"" # empty string

[] # empty list

{} # empty dictionary

() # empty tuple (set() is the empty set)

when __len__() returns 0 # eg instance of a user-defined class reporting zero length

when __bool__() returns False # eg instance of a user-defined class (python2 calls this method __nonzero__)
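The list above can be checked with a short script. EmptyBox and AlwaysNo are made-up classes that only exist to show the __len__ and __bool__ cases (python3 naming):

```python
class EmptyBox:
    def __len__(self):
        return 0          # zero length -> falsy

class AlwaysNo:
    def __bool__(self):   # python2 would look for __nonzero__ instead
        return False

falsy = [False, 0, 0.0, 0j, "", [], {}, (), set(), None, EmptyBox(), AlwaysNo()]
truthy = ["0", [0], (0,), {"k": 0}, " "]   # non-empty containers are true, whatever they hold

all_falsy = not any(bool(v) for v in falsy)
all_truthy = all(bool(v) for v in truthy)
print(all_falsy, all_truthy)
```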

constants

https://docs.python.org/2/library/constants.html

True # built-in bool type, does not take TRUE.

False

None # types.NoneType a function that should return an object but just issue "return" will get None. kinda like NULL

NotImplemented

Ellipsis # used in slicing syntax

__debug__ # true if not started as python -O

* and **

http://stackoverflow.com/questions/3394835/args-and-kwargs

* eg *args # list of args

** eg **kwargs # dictionary (key, val) variable list of args

def fn1( *args ) :
    # *args is for variable number of (positional) arguments, received as a tuple
    for (i, arg) in enumerate( args ) :
        print( i, arg )

def fn2( **kwargs ) :
    # **kwargs is for variable number of named arguments, received as a dictionary
    for (name,val) in kwargs.items() :
        print( name )
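A short usage sketch for * and **, both in the function definition and at the call site. The function describe() and the sample values are invented for illustration:

```python
def describe(*args, **kwargs):
    """Return the positional and named arguments as they were received."""
    return args, kwargs

positional, named = describe(1, 2, color="red")

# * and ** also work at the call site, to unpack a sequence or a dictionary
coords = [3, 4]
options = {"color": "blue"}
positional2, named2 = describe(*coords, **options)
print(positional, named, positional2, named2)
```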

Strings

% is the string formatting magic in python, but it is the old magic. The new one is {} with .format()

print( "most c-style string %s works" % stringvar )

print( "num %d, fixed point %8.2f, exponent %12e" % ( 123, 6.1234, 0.0000123 ) )

ref https://docs.python.org/2/library/string.html#format-specification-mini-language

print( "Total rows processed: {:,}".format(rows ) ) # {:,} provides thousand separators

ref https://pyformat.info/
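The formatting styles side by side, as a sketch. The values are made up, and the f-string line assumes python 3.6 or later:

```python
rows = 1234567
name = "abc"

old_style = "num %d, fixed point %8.2f, name %s" % (123, 6.1234, name)
new_style = "Total rows processed: {:,}".format(rows)
f_string = f"Total rows processed: {rows:,}"   # python 3.6+

print(old_style)
print(new_style)
print(f_string)
```

The format specification after the colon is shared between .format() and f-strings, so {:,} and {rows:,} give the same result.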

strings, like tuple, are immutable.

if 'abc' in StringVariable : # searching string to see if it contain a substring:

if 'abc' == StringVariable : # the two strings are the same

if Foo is Bar : # see if two objects are the same (which would then means same string, but this is OBJECT comparison!)

"" vs '' # no difference in python. It is NOT like shell where variables are not evaluated inside ''. Pick whichever avoids escaping the other kind of quote

[] vs () # really depends if function is expecting a list or a tuple/set

Strings examples:

description = """Topspin NMR software (data processing option only)"""

"""this can be multiline string

and can serve as

comment out code

etc"""

'''here is another multiline string

that includes line break'''

Note that while a multi-line string can be treated as a multi-line comment, the indentation matters!

the opening quotes must start at the indent level of the surrounding code.

if it starts flush at the left margin inside an indented block, it can break the code

source = [ 'topspin.%s.tgz' % version ]

'%s %s' % (path,version)

install_cmd = [ 'tar xfzp %s/%s' % (source_urls,sources) ] ## file:/// screws up tar

sanity_check_paths = {

'files': ['bin/%s' % x for x in ['moe', 'moebatch', 'chemcompd', 'rism3d', 'sdwash']],

'dirs': [],

}

postinstallcmds = [ 'pwd', 'ls -la', 'touch TesT.txt', 'mkdir %(installdir)s/prog/curdir/wongja7', 'chgrp emv-structchem prog/curdir', 'chmod g+w prog/curdir' ]

modextravars = {

#'TOPSPIN_HOME': '/usr/prog/topspin/3.5pl2',

'TOPSPIN_HOME': '%(installdir)s',

}

toolchain = {'name': 'dummy', 'version': 'dummy'}

Globals, Module's var

Best way to make modules variables?

This maybe one way, which is what i used in taxo reporter.

Define variable at top of module, and comment that other who import it would change it?

Similar in spirit to __debug__ and __builtin__

import mydb

mydb.foo = bar

this way, bar could be set from cli args (eg parsed by argparse; many file paths can be set at run time, yet have some defaults defined as the module's global var)

cross-module var discussion suggested:

__builtins__.foo = bar

which may seem to be good enough, but new versions of python may run into name conflicts.

Note __builtin__ is the global counterpart that needs to be imported before use. python3 renamed it to builtins.

Python2 FAQ

recommends the creation of a global module for the project, calling it config.py or cfg.py,

put all variables there,

and have all consumer refer to it.

For a large project with multiple, cross-module references, this avoids a spaghetti of "globals" in each module .py file.

OOP's use of mutator/constructor to set them isn't necessary. Just modify the var; python doesn't offer protection, just conventions.
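The shared config module idea can be sketched in a single file. Here types.ModuleType stands in for a real cfg.py so the example is self-contained; cfg, db_path and verbose are made-up names, and in a real project cfg.py would simply be a file holding the defaults:

```python
import sys
import types

# stand-in for a real cfg.py file
cfg = types.ModuleType("cfg")
cfg.db_path = "/tmp/default.db"   # default, as it would appear at the top of cfg.py
cfg.verbose = False
sys.modules["cfg"] = cfg          # lets `import cfg` below find it

def main_program():
    import cfg
    cfg.verbose = True            # eg set from a command line flag

def library_code():
    import cfg
    return cfg.verbose, cfg.db_path   # sees the value set by main_program()

main_program()
seen = library_code()
print(seen)
```

Because a module is imported only once per process, every consumer gets the same module object and therefore the same variables.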

Scoping rules

LEGB Rule.

Local

Enclosing function locals

Global (module)

Built-in (python)

Before changing a global var inside a fn, must first declare var as global

Python3 added a nonlocal clause

Python Scoping rules discussion
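A small sketch of the LEGB lookup and of the global and nonlocal declarations (python3, since nonlocal does not exist in python2). All names are illustrative:

```python
counter = 0                      # Global (module) scope

def bump():
    global counter               # without this, counter += 1 raises UnboundLocalError
    counter += 1

def make_accumulator():
    total = 0                    # Enclosing function local
    def add(n):
        nonlocal total           # rebind the variable of the enclosing function
        total += n
        return total
    return add

bump()
bump()
acc = make_accumulator()
acc(5)
running_total = acc(10)
print(counter, running_total, len("abc"))   # len is found in the Built-in scope
```

Reading a global inside a function needs no declaration; only assignment does.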

Snippets in stand alone program

# tab nanny

python -t # python2: display warnings

python -tt # python2: display errors

# use SPACES in python!!

# avoid TAB, which python2 treats as 8 spaces.

# space is what delineate a block.

#

# code indented 4 spaces is at diff block level than those with 2 spaces !!

# also note the use of : after evaluation of condition, the else clause

if ( A < B and C < D) :
    print( "and will be optimized, C < D is evaluated only if A < B is True" )
    print( "python &, | are bitwise operator" )
    print( "this is still part of the if-block" )
elif ( P == Q ):
    print( "string and numeric equality is tested by ==" )
elif ( P != Q ):
    print( "!= can be used to test whether two objects are different" )
elif ( 1.5 < X < 4.8 or 178 < Y < 188 ):
    print( "range test can be carried out as condition evaluation" )
else:
    print( "final else part" )
print( "this line is beyond the end of the if/elif/else block" )

# note there is no brackets or endif command to delineate the block !!

eg for-loop

for x in list :
    cmd1
    cmd2
    cmd3

use `continue` to jump to next iteration

while( X < 10 ) :
    cmd1
    cmd2

# logical operator

# just simple word, no all caps, no use of && || (editor will color these reserved word differently)

and

or

not

# string equality comparision using ==

txt = "abc"

if( txt == "abc" ):

print( "match" )

# import regular expression (regex) lib

import re

# this is closest to perl re search

m = re.search(r"(\w+)(Jul)(\w+)", "foo_Jul_bar")

if m : # ie execute only when a match is found
    print( "YES match found" )
    print( m.group(0) ) # "foo_Jul_bar", ie the whole regex match
    print( m.group(1) ) # "foo_", perl's \1
    print( m.group(2) ) # "Jul" , perl's \2
    print( m.group(3) ) # "_bar", perl's \3
else :
    print( "NO match found" )

#re.match(...) match only starting from the beginning
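The difference between re.search and re.match, sketched on the same sample string. re.fullmatch is added for comparison and needs python 3.4 or later:

```python
import re

text = "foo_Jul_bar"

found_anywhere = re.search(r"Jul", text)     # scans the whole string
found_at_start = re.match(r"Jul", text)      # only tries position 0
whole_string = re.fullmatch(r"\w+", text)    # must cover the entire string

print(found_anywhere is not None, found_at_start is None, whole_string is not None)
```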

# get command line argument

import sys

option1 = sys.argv[1]

# argv[0] is the name of the script as invoked (the path of the python interpreter itself is in sys.executable)

# example for enumeration and 2D hash

# enum functional style, need python3

# https://docs.python.org/3/library/enum.html#functional-api

from enum import Enum

RankSet = Enum( 'Rank', 'species genus family order superkingdom' )

RankSet = Enum( 'Rank', ['species', 'genus', 'family', 'order', 'class', 'phylum', 'superkingdom', 'no rank', 'NoLineageData'] )

def example2Dhash( giList ) :
    # 2D dictionary is really a hash nested inside another hash
    # simple usage can use a decent format.
    # but initialization is pretty hairy,
    # Under some circumstance may not need to init the 2D dictionary,
    # but in this eg the 2D hash is evaluated before it is set
    # in the line "if lineage in resultTable2[rank]:"
    # therefore init is needed (or add more test condition before the if-line).
    # may really want to create a class, and go with OOP for at least this data structure...
    # 2D hash ref:
    # http://stackoverflow.com/questions/3817529/syntax-for-creating-a-dictionary-into-another-dictionary-in-python
    # http://www.python-course.eu/python3_dictionaries.php
    #resultTable2 = {"species": {}}
    resultTable2 = {"NoLineageData": {}}
    for nom in RankSet.__members__ :
        #resultTable2.update( { nom: {"NoLineageData":0} } ) # seed both hash keys, may not need this complication for init sake
        resultTable2.update( { nom: {} } ) # seed only first hash key
    # other example of init elements of the 2D hash:
    #resultTable2.update( { "NoLineageData": {"NoLineageData":0} } )
    #resultTable2 = { "species": {"homo":0} }
    #resultTable2.update( { "species": {}, "genus": {}, "family": {} } )
    #resultTable2.update( { "species": {"HOmo":0}, "genus": {"GEnus":0}, "family": {} } )
    # if did not initialize the 2D hash above, assignment below would fail.
    for gi in giList:
        for rank_item in RankSet:
            print( rank_item.name ) # .name ref https://docs.python.org/3/library/enum.html#programmatic-access-to-enumeration-members-and-their-attributes
            rank = str(rank_item.name)
            lineage = getLineageByGi( gi, rank )
            dbg( "%s \t %s \t %s " % (gi, rank, lineage) )
            if lineage in resultTable2[rank]: # python3 changed has_key to "KEY in" python3 https://docs.python.org/3/library/2to3.html?highlight=has_key#2to3fixer-has_key
                resultTable2[rank][lineage] += 1
            else:
                resultTable2[rank][lineage] = 1
                ###resultTable2.update( { rank }: {[lineage]: 1} ) # don't really need this convoluted syntax!
        #for-end rank_set
    #for-end gi
    print( resultTable2["species"]["Aedes pseudoscutellaris reovirus"] )
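The initialization can be avoided altogether with collections.defaultdict from the standard library. This is an alternative sketch, not the approach used above; the observations list is made-up data standing in for the getLineageByGi() results:

```python
from collections import defaultdict

# made-up (rank, lineage) observations
observations = [
    ("species", "HBV"), ("species", "HBV"), ("species", "HIV"),
    ("genus", "Orthohepadnavirus"),
]

# outer key -> inner key -> count; both levels are created on first use
result_table = defaultdict(lambda: defaultdict(int))
for rank, lineage in observations:
    result_table[rank][lineage] += 1

print(result_table["species"]["HBV"])
```

One thing to keep in mind: merely reading a missing key from a defaultdict creates it, so membership tests should use in rather than indexing.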

Ref for Enumeration :

tech blog cover .name, .value, Enum( n ), Enum['name']

python3 standard library autonumber eg

# Auto numbering Enumeration in a class, so as to be able to define functions

# It demo some construct, but a hash maybe simpler and less overhead

# Enum was a python3 feature, thus

# in python2, need to "pip install enum34" (which is diff than enum module, out of fashion now)

from enum import Enum

class AutoNumber( Enum ) :
    def __new__( cls ) :
        value = len( cls.__members__ ) + 1
        obj = object.__new__( cls )
        obj._value_ = value
        return obj

class RankSet( AutoNumber ) :
    species = () # order in this list matter!!
    genus = () # RankSet.__x__.name
    family = () # can add other ranks in middle if desired
    superkingdom = () # code expects sk to be highest
    #'no rank' = () # can't do this, but new class-way of RankSet should not need this anyway

    @classmethod
    def getLowest( cls ) :
        #(name, member) = cls.species
        #return cls.species # return RankSet.species (what is needed programatically for getParent() etc
        return cls( 1 ) # know that lowest rank in Enum class starts with 1
        # http://www.tech-thoughts-blog.com/2013/09/first-look-at-python-enums-part-1.html
        #return cls.species.name # return species # http://stackoverflow.com/questions/24487405/python-enum-getting-value-of-enum-on-string-conversion
        # below will do the equivalent, but much slower
        for (name, member) in cls.__members__.items() :
            if member.value == 1 :
                return name
        #return cls.__members__

    @classmethod
    def getHighest( cls ) :
        return cls.superkingdom # how to use value=max ??

    # getParent(species)
    @classmethod
    def getParent( cls, rank ) :
        if( rank == cls['superkingdom'] ) :
            return None # return None, as no parent for sk
        return cls( rank.value + 1)
        # below will do the equivalent, but much slower
        for (name, member) in cls.__members__.items() :
            if member.value == rank.value + 1 :
                return member

    @classmethod
    def getChild( cls, rank ) :
        if( rank.value == 1 ) :
            return # return None, as no child for species
        return cls( rank.value - 1)
        # below will do the equivalent, but much slower
        for (name, member) in cls.__members__.items() :
            if member.value == rank.value - 1 :
                return member

# RankSet class end

# RankSet is meant to be a static class, not to be instantiated.

# support calls like these:

# RankSet.getLowest() # RankSet.species

# RankSet.getLowest().name # species

# RankSet.getParent( RankSet.getLowest() ) # RankSet.genus

# r = RankSet.getChild( RankSet.getLowest() ) # get None when "out of range"

# if r is None : # r == None works, but may break when == gets overloaded

# print( "got None from RankSet fn call..." )

# RankSet(1).value RankSet(3).name RankSet['genus'] are valid attributes

Snipplet with example of namedtuple ::

from collections import namedtuple

GiNode = namedtuple( 'GiNode', ['Freq', 'Taxid'] ) # define the type once, outside the loop

def eg_of_create_namedtuple() :
    giList = { }
    f = open( filename, 'r' )
    for line in f:
        lineList = line.split( '|' )
        g = lineList[1] # python list index start at 0
        if g not in giList :
            taxid = getTaxidByGi( g )
            giList[g] = GiNode( Freq=1, Taxid=taxid)
        else :
            #(freq, taxid) = giList[g] # this works
            gin = giList[g] # but this keep to the spirit of namedTuple as an entity
            giList[g] = GiNode( gin.Freq+1, gin.Taxid )
    f.close()
    return giList

def egConsumer_of_namedtuple( giList ) :
    for g in giList :
        print( "looking at gi:%s \t with freq: %s \t and taxid=%s" % (g, giList[g].Freq, giList[g].Taxid) )
        if giList[g].Taxid not in resultTable4[currentRank] :
            # the "key" to the namedtuple is available here even when it is not defined here
            parentTaxid = getParentByTaxid( giList[g].Taxid )
            rankName = getLineageByTaxid( giList[g].Taxid, currentRank )
            node = TaxoNode( parentTaxid, rankName, giList[g].Freq, giList[g].Taxid )
            resultTable4[lowestRank][giList[g].Taxid] = node
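A self-contained version of the counting pattern, as a sketch. The GI numbers and the "tax" prefix are made up and stand in for the file parsing and the getTaxidByGi() lookup:

```python
from collections import namedtuple

GiNode = namedtuple("GiNode", ["Freq", "Taxid"])

gi_list = {}
for gi in ["111", "222", "111"]:          # made-up GI numbers
    if gi not in gi_list:
        gi_list[gi] = GiNode(Freq=1, Taxid="tax" + gi)   # placeholder for a real taxid lookup
    else:
        node = gi_list[gi]
        gi_list[gi] = node._replace(Freq=node.Freq + 1)  # tuples are immutable, so build a new one

print(gi_list["111"])
```

_replace() returns a new namedtuple with the given fields changed, which saves repeating the fields that stay the same.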

# reading text file

f = open( filename, 'r' )

print( f.read() ) # print whole file
f.seek( 0 ) # rewind before reading the file again
for line in f:
    print( line )
    lineList = line.split( ',' )
f.close()

# write to file

outFH = open( outfile, 'w' )

outFH.write( "typical write method\n" )

print( "print redirect write method, need to add 'from __future__ import print_function' in python2 to work" , file = outFH )

displayText = '{0: ^50}'.format( entry )

print( "%5d \t %8.4f %% \t %s" % (intNum, floatNum, stringVar) , file = outFH )

outFH.close()
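The same read and write steps are often written with a with block, which closes the file even if an error occurs. A sketch using a throwaway temporary file; the file name and values are invented:

```python
import os
import tempfile

path = os.path.join(tempfile.mkdtemp(), "demo.csv")   # throwaway file for the demo

with open(path, "w") as out_fh:
    out_fh.write("typical write method\n")
    print("%5d,%8.4f,%s" % (42, 3.14159, "abc"), file=out_fh)

with open(path, "r") as in_fh:
    lines = [line.rstrip("\n") for line in in_fh]

fields = lines[1].split(",")
print(lines)
print(fields)
```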

Sort

The

python Sort HowTo is a concise read on how to sort iterables by specifying which field to use as key.

__repr__ ...

mylist.sort() sorts in-place, so it saves space and is slightly faster. It returns None.

sorted(mylist) returns a new sorted list, so a tad slower, but said to be not too significant.

By default, Python uses timsort, an optimized mergesort. It is heavily optimized for already-sorted input, where it needs only N-1 comparisons, ie O(N). Worst case is O(N lg N), about lg(N!) comparisons.

More info at

http://stackoverflow.com/questions/1436962/python-sort-method-on-list-vs-builtin-sorted-function
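A sketch of sorting by a chosen field with the key argument, and of the sort() versus sorted() difference. The records are made-up (name, count) pairs:

```python
from operator import itemgetter

records = [("HIV", 14), ("BK", 28), ("HBV", 13)]

by_count = sorted(records, key=itemgetter(1))                    # new list, ascending by count
by_count_desc = sorted(records, key=lambda r: r[1], reverse=True)

names = [name for name, _ in records]
returned = names.sort()                                           # in place; the return value is None
print(by_count, by_count_desc, names, returned)
```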

...

Functional Programming in python

Python supports imperative(procedural), OOP, as well as functional style. Since it is not dictated/required, hybrid approach is possible. A few observations:

- avoiding side effects (core of functional programming) may not always be possible. eg. printing message to screen, writing to file.

- functional programming centers on being stateless. easier to achieve for a function that takes specific input and produces output deterministically. but the inside of the function may need to be stateful for more complex tasks.

Well, sorting is complex, can be done procedurally (bubble sort) but can also be done by divide and conquer without state, as can be done via merge sort.

- focusing on stateless, functional programming is in this sense at the opposite end of the spectrum from OOP, which is objects with methods that change internal state.

The following slide from my colleague Wes provide the gist of FP in Python:

lambda functions: create anonymous functions

addFive = lambda x: x + 5

addFive(8) # result: 13

map()

map(func, sequence) # Applies func() to every element of sequence.

filter()

filter(func, sequence) # Returns elements where func() returns True.

reduce()

reduce(func, sequence) # Reduces a list to a single value. (python3: from functools import reduce)

total = reduce(lambda x,y: (x+y), [1,2,3]) # result: 6

list comprehensions: syntactic sugar, clearer than map() or filter()

[x.upper() for x in seq] vs. map(lambda x: x.upper(), seq)

[x for x in seq if x > 0] vs. filter(lambda x: x > 0, seq)

collect() # Return a list of all elements
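The map/filter/reduce items above, sketched as runnable python3. In python3, map() and filter() return lazy iterators and reduce lives in functools; the sample sequence is made up:

```python
from functools import reduce   # reduce moved to functools in python3

seq = [3, -1, 4, -1, 5]

doubled = list(map(lambda x: x * 2, seq))          # map() is lazy in python3, hence list()
positives = list(filter(lambda x: x > 0, seq))
total = reduce(lambda x, y: x + y, seq)

# the comprehension equivalents
doubled_lc = [x * 2 for x in seq]
positives_lc = [x for x in seq if x > 0]
print(doubled, positives, total)
```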

maybe useful books...

http://www.amazon.com/Guide-Functional-Python-Comprehension-Constructs-ebook/dp/B00CUZDOSI/ref=tmm_kin_swatch_0?_encoding=UTF8&qid=1453132773&sr=8-8

concise intro to functional programming. likely using python as construct.

about 45 pages. maybe better than some web stuff?

talks about lambda fn, map/reduce/filter, then go into recursion, comprehension, generators.

the above is Section III of Treading on Python Volume 2: Intermediate Python

(seems like I don't like either).

http://www.amazon.com/Functional-Python-Programming-Steven-Lott/dp/1784396990/ref=sr_1_1?ie=UTF8&qid=1453132773&sr=8-1&keywords=functional+programming+python

start out with procedural/functional hybrid.

maybe easier to follow to get more functional code into programs.

some 330 pages. dive into many specific of iter(), where they are used, etc.

worthwhile if start programming a lot in python.

PS. LISP is the early functional language. code was pretty hard to read. Erlang is more modern. CouchDB is coded in Erlang.

iterators

even if don't really want to get fully functional, understanding iterator goes a long way in understanding many procedural constructs.

eg. for X in Y is really for X in iter(Y)

list and dictionaries are iterable.

dictionaries especially!

m = { 'jan': 1, 'feb': 2, 'mar': 3 }

for key in m: # same as for key in iter(m)
    print( key, m[key] )

# side note: python2 allowed

# if m.has_key( k )

# the has_key() is no longer avail in python3

# so use the syntax of

# if k in m

iter( m ) # create iterator from dictionary. see https://docs.python.org/3/library/stdtypes.html#typesmapping

m.iterkeys() # python2 only; python3 use m.keys()

m.itervalues() # python2 only; python3 use m.values()

m.items() # python2 use m.iteritems()

in 2D dictionaries... ??

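table = { 'species': { 'HBV': 13, 'BK': 28, 'HIV': 14 },
          'genus': { 'H': 27, 'B': 28 },
          'family': { 'tot': 55 }
        }

ranks = table.keys() # python2: table.iterkeys()

familySum = sum( table['genus'].values() ) # python2: itervalues()

table.items() # (rank, inner dictionary) pairs; python2: iteritems()

iter( table ) # there is no table.iter(); iter() on a dictionary walks its keys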

there are the

iter()

tuple()

that help understand list/tuple generations/conversion.
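A python3 sketch of iterating a nested dictionary and of the iter() and tuple() helpers. The counts are sample values, and the key order shown relies on dictionaries preserving insertion order (python 3.7 or later):

```python
table = {
    "species": {"HBV": 13, "BK": 28, "HIV": 14},
    "genus": {"H": 27, "B": 28},
    "family": {"tot": 55},
}

ranks = list(table)                                  # iterating a dict yields its keys
totals = {rank: sum(inner.values()) for rank, inner in table.items()}

it = iter(table["genus"])                            # explicit iterator over the inner keys
first_key = next(it)
as_tuple = tuple(table["family"].items())            # tuple() consumes any iterable
print(ranks, totals, first_key, as_tuple)
```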

Ref:

Python 2 - Functional - iterators

Generator and Comprehension

This is probably key to wrap head around functional programming.

() is for generator expression ... returns an iterator

[] is for list comprehension ... returns a list

the content inside the parentheses and brackets (the for ... in part) tells python it is not a plain tuple or list

( obj.count for obj in list_all_objects() )
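A sketch contrasting the two forms. The generator yields values lazily and can only be consumed once, while the list is built in full right away:

```python
import sys

squares_list = [n * n for n in range(1000)]   # built in full, right away
squares_gen = (n * n for n in range(1000))    # produces values only when asked

first = next(squares_gen)
second = next(squares_gen)
rest_total = sum(squares_gen)                 # consumes what is left; a generator runs once

print(first, second, rest_total)
print(sys.getsizeof(squares_gen), sys.getsizeof(squares_list))
```

For large inputs that are only walked once, the generator form avoids holding every element in memory.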

Ref:

Python 2 - Functional - generator...

Object Oriented Programing in Python

OOP, especially data structure with functions to modify its state, is like the

opposite of Functional Programming.

GUI are probably natural with OOP,

but biz logics probably better with FP, and Procedural approach good enough.

Python modules provides encapsulation and separation, yielding some benefits of OOP w/o the altered logic imposed by classes.

see

http://docs.python-guide.org/en/latest/writing/structure/

class myClass(parentClass1, parentClass2) :

    classwideVar = "this is shared by all object/instances of this class. " # be careful with this, not like Java!

    def __init__( self ) :

        self.instanceVar = "this is instance specific"

    def fn( self ) :

        print( "hello world" )

# super() refers to the parent class

parentClass can be left out if not inheriting anything. Inheritance is declared in the class statement; creating an object need not state anything about it.

built-in and standard library types can be used as parentClass. eg object, Enum.

multiple parent classes can be listed, comma delimited.
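
A minimal sketch of why the class-wide variable deserves care. The Basket class and its attribute names are hypothetical, made up for this illustration: a mutable class attribute is one object shared by every instance, while attributes set on self belong to each object.

```python
class Basket:
    shared_items = []              # class attribute: one list shared by every instance

    def __init__(self):
        self.own_items = []        # instance attribute: a fresh list per object

a = Basket()
b = Basket()

a.shared_items.append("apple")     # mutates the single class-level list
a.own_items.append("pear")         # only touches a's own list

print(b.shared_items)              # b sees the apple too
print(b.own_items)                 # b's own list is still empty
```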

x = myClass()

xf = x.fn # this is valid! a method name is an attribute of the class... this defines an alias to the bound method...

xf() # actually calls x.fn()

myClass.fn(x) # this is what is happening when calling x.fn(), which is why first param of fn is called self.

Data attributes override method attributes with the same name !!

Use some standard to avoid bugs, eg verbs for methods, nouns for data.

well, Java says data should be private and accessed via methods provided by the class...
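
A short sketch of the name clash described above. The Counter class is hypothetical; it shows an instance data attribute shadowing a method of the same name until the data attribute is deleted.

```python
class Counter:
    def count(self):               # method attribute named "count"
        return 42

c = Counter()
print(c.count())                   # works: the method is found on the class

c.count = 7                        # instance data attribute with the same name
print(c.count)                     # the data attribute now shadows the method
print(callable(c.count))           # no longer callable through this instance

del c.count                        # removing the data attribute exposes the method again
print(c.count())
```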

class Pizza(object):

    shape = "round" # ie, all pizzas will have the same shape.

    favoriteIngredient = "pepperoni"

    def __init__(self, ingredients):

        self.ingredients = ingredients # instance variables come to life when they are first assigned

    @classmethod # define a class-level method, ie it receives the class (cls), not an object instance

    def getFavoriteIngredient( cls ):

        return cls.favoriteIngredient

p = Pizza( "pineapple" )

print( p.ingredients ) # attributes in python are "public" in the C++/Java nomenclature

# nothing in python enforces data hiding, it is all done by convention!!

print( Pizza.shape )

# class is object too in python!

# Exceptions are ... ??

@staticmethod

@classmethod

@abstractmethod

nonlocal

global

ref:

static class in python tutorial

Python 3 tutorial on classes

DataFrame (Pandas), Series

DataFrame is essentially a table (2D).

Operations (methods) work on all elements of a given column, so there is no need to write iterative loops.

Series (the pandas name for a "data series") is a different data structure and has different methods.

A Series is 1D: a single labeled column (or a single row) of a DataFrame.

import pandas as pd

unemployment = pd.read_csv("data.csv")

myTable.to_csv("path/result.csv") # save result, export to csv

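slices (return another dataframe) vs loc/iloc (return a series when a single row or column is selected, a dataframe otherwise)

pretty confusing here.

selection does not mutate the dataframe like an inplace method would.

it just returns a new object that is displayed by jupyter notebook, but otherwise discarded if not assigned to a variable.

Note that for loc (label based), the ending label IS included. But for iloc (position based), the ending position is NOT included. !!

[...] is for slicing rows (or picking one column by name, which gives a series)

[[...]] takes a list of column names and returns a dataframe with just those columns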
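
A small sketch of the selection rules above, assuming pandas is installed. The table contents and index labels are made up; the index is deliberately not 0..3 so that label-based and position-based selection are easy to tell apart.

```python
import pandas as pd

df = pd.DataFrame(
    {"country": ["AT", "BE", "BG", "CY"], "rate": [4.9, 7.8, 6.2, 11.1]},
    index=[10, 20, 30, 40],        # made-up row labels
)

by_label = df.loc[10:30]           # label based: the end label 30 is included
by_position = df.iloc[0:2]         # position based: stops before position 2

one_column = df["rate"]            # single brackets: a Series
sub_frame = df[["rate"]]           # double brackets: a one-column DataFrame

print(len(by_label), len(by_position))
print(type(one_column).__name__, type(sub_frame).__name__)
print(df.shape)                    # unchanged: selection does not mutate df
```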

merge.

Used to join two dataframes.

This is essentially a JOIN in database parlance.

Left/Right/inner/outer applies (the how= argument), which may generate really strange looking tables. RTFM.
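
A minimal sketch of the join flavors, assuming pandas is installed. Both tables and their column names are invented for illustration; the code XX exists only on the left and CY only on the right, which is where the strange looking rows with missing values come from.

```python
import pandas as pd

rates = pd.DataFrame({"code": ["AT", "BE", "XX"], "rate": [4.9, 7.8, 3.3]})
names = pd.DataFrame({"code": ["AT", "BE", "CY"],
                      "name": ["Austria", "Belgium", "Cyprus"]})

inner = pd.merge(rates, names, on="code", how="inner")  # only codes present in both
left = pd.merge(rates, names, on="code", how="left")    # every row of rates, missing names become NaN
outer = pd.merge(rates, names, on="code", how="outer")  # union of the codes from both tables

print(inner)
print(left)
print(outer)
```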

inplace=True # edit table in place. the method then returns None

inplace=False # the default. good for transient display. the existing table is not changed, saving needs assignment to a (new) table

unemployment.drop(... , inplace=True, ... ) # drop column. do NOT assign the result back: with inplace=True it is None

dropna() # drop rows with any missing value (axis=1 drops columns instead).

unemployment['en_name'].unique() # return unique country names

unemployment['en_name'].nunique() # think of count( ...unique() )

unemployment['unemployment_rate'].isnull().sum() # give a count of number of rows where column unemployment_rate is null (ie missing data).

.reset_index(drop=True, inplace=True) # eg before plotting, good to reset the row index if done work to remove data.

index usually used as x values in plots, thus sequential indexing would be nice (or else get gap?)
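
A short sketch tying together inplace, dropna() and reset_index(), assuming pandas is installed. The tiny table is made up for illustration.

```python
import pandas as pd

df = pd.DataFrame({
    "name": ["Austria", "Belgium", "Cyprus"],
    "rate": [4.9, None, 11.1],
    "note": ["a", "b", "c"],
})

smaller = df.drop(columns=["note"])                # default inplace=False: df itself is untouched
result = df.drop(columns=["note"], inplace=True)   # inplace=True: df is changed, return value is None

cleaned = df.dropna()                      # drops the row whose rate is missing
cleaned = cleaned.reset_index(drop=True)   # renumber rows from 0, discard the old index

print(result)
print(list(df.columns))
print(list(cleaned.index))
```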

pd.to_datetime('1868/3/23') # in yyyy/m/dd format!! :)

pd.to_datetime('3/23/1868', format='%m/%d/%Y') # specify format

return a timestamp object.

GroupBy

unemployment.groupby('name')['unemployment_rate_null'].sum()
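
A minimal sketch of the groupby line above, assuming pandas is installed and assuming unemployment_rate_null is a True/False flag column derived with isnull() (the source does not show how it was built). The data is made up.

```python
import pandas as pd

unemployment = pd.DataFrame({
    "name": ["Austria", "Austria", "Belgium", "Belgium"],
    "unemployment_rate": [4.9, None, None, None],
})

# Assumed derivation of the flag column: True where the rate is missing.
unemployment["unemployment_rate_null"] = unemployment["unemployment_rate"].isnull()

# True counts as 1 when summed, so this is the number of missing rates per country.
missing_per_country = unemployment.groupby("name")["unemployment_rate_null"].sum()
print(missing_per_country)
```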

Ref:

Berkeley D-Lab

Introduction to pandas

PySpark

PySpark, Python, SparkSQL and submitting a job to a Cloudera YARN cluster

(More info about these technologies in the BigData page.)

from pyspark import SparkContext

from pyspark.sql import SQLContext, Row

from pyspark.sql.types import *

def main() :

    sc = SparkContext( appName='pyspark_yarn_app' )

    #sc = SparkContext( 'local', 'pyspark_local_app' )

    sqlContext = SQLContext(sc)

    lines = sc.textFile("ncbi.taxo.dump.csv")

    parts = lines.map(lambda l: l.split("\t"))

    acc_taxid = parts.map(lambda p: (p[0], p[1].strip(), p[2].strip(), p[3].strip() ))

    schemaString = "acc acc_ver taxid gi"

    fields = [StructField(field_name, StringType(), True) for field_name in schemaString.split()]

    schema = StructType(fields)

    schemaAccTaxid = sqlContext.createDataFrame(acc_taxid,schema)

    schemaAccTaxid.registerTempTable("acc_taxid")

    sqlResult = sqlContext.sql( "SELECT taxid from acc_taxid WHERE acc_ver = 'T02634.1' " ) # sparkSQL does NOT allow for

    myList = sqlResult.collect() # need .collect() to gather the result into a list of "Row" objects

    print( myList[0].taxid ) # taxid is the name of the column specified in select

    # note that std out is typically mixed with many hadoop job output, best to print to a file

# main()-end

# ref: http://stackoverflow.com/questions/24996302/setting-sparkcontext-for-pyspark

To submit to the cluster, run spark-submit from the command line. The second form is for when you want to be very specific about job parameters:

spark-submit --master yarn --deploy-mode cluster my_spark_app.py

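spark-submit --master yarn --deploy-mode cluster --driver-memory 8G --executor-memory 16G --num-executors 8 --executor-cores 4 my_spark_app.py

(on YARN the core count is given as --num-executors times --executor-cores, here 8 x 4 = 32. --total-executor-cores is documented for standalone and Mesos masters rather than YARN)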

If the python program (app) resides in HDFS, then it can be specified as

spark-submit --master yarn --deploy-mode cluster "hdfs:///user/tin/my_spark_app.py"

YARN adds quite a few wrapping layers, so much of the standard output and standard error gets lost. To see them, it is better to run in local mode instead of cluster mode. Use one of:

spark-submit --master local my_spark_app.py

spark-submit --master local[4] my_spark_app.py

pyspark my_spark_app.py

Common location to hunt for spark-submit and pyspark:

/usr/bin/pyspark

/usr/lib/spark/bin/pyspark

/usr/bin/spark-submit

IMHO, it is best to specify the job parameters on the command line as arguments to spark-submit.

However, they can be coded in the python app itself by putting them in a SparkConf that is passed to the SparkContext, see code below for example.

The settings defined in the python code trump cli arguments for spark-submit.

from pyspark import SparkContext, SparkConf

from pyspark.sql import SQLContext, Row

from pyspark.sql.types import *

def main() :

    conf = SparkConf()

    conf.set( "spark.app.name", "spark_app")

    #conf.set( "spark.master", "local" )

    conf.set( "spark.master", "yarn" )

    conf.set( "spark.submit.deployMode", "cluster" )

    conf.set( "spark.eventLog.enabled", True )

    conf.set( "spark.eventLog.dir", "file:///home/tin/spark" )

    sc = SparkContext( conf=conf ) # conf= is needed for spark 1.5 and older

    sqlContext = SQLContext(sc)

## http://stackoverflow.com/questions/24996302/setting-sparkcontext-for-pyspark

## https://spark.apache.org/docs/1.5.0/configuration.html

Parallel Programming in python

- Python Global Interpreter Lock (GIL) allows only one thread to execute python bytecode at a time, thus a multi-threaded python program cannot run python code on several cores in parallel. A running thread is asked to release the GIL every 5 ms (the default switch interval) so other threads can be scheduled.

NOTE: multiple processes are completely independent (ie they each have their own GIL).

- Network IO functions typically release the GIL while they xfer data

- Threads are still avail (from threading import Thread, Event) but suitable mostly for doing async io stuff. With the GIL in the current implementation it is hard to get high perf parallel code out of threads

- NumPy/SciPy, zlib, bz2, and many high perf math libs can run in parallel because they are implemented natively in C. The Python interface to them releases the GIL while running.

- Parallelization for AI work: TensorFlow and PyTorch (SciKit-Learn?) are implemented in C++ as python extensions, and code there does not depend on the GIL either. multi-core CPU and GPU code works fine in this space.

- PySpark, but have to use the hadoop/spark framework

- mpi4py, async parallel paradigm of MPI

- Child process based approach: Process and Pool classes: import multiprocessing. Because each process has its own GIL, this tends to give higher performance for CPU-bound work. But there is the overhead of inter-process communication: serialize-deserialize (if fork()-based, then child shares parent memory/data?)

-
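
A minimal sketch of the child-process approach from the list above, using multiprocessing.Pool. The count_primes function and the limits are made up as a toy CPU-bound workload; real speedup depends on the number of cores and on how much data has to be pickled back and forth.

```python
from multiprocessing import Pool

def count_primes(limit):
    """CPU-bound toy task: count primes below limit by trial division."""
    count = 0
    for n in range(2, limit):
        if all(n % d for d in range(2, int(n ** 0.5) + 1)):
            count += 1
    return count

if __name__ == "__main__":           # guard so child processes can import this module safely
    limits = [10_000, 20_000, 30_000, 40_000]
    with Pool(processes=4) as pool:  # each worker is a separate process with its own GIL
        results = pool.map(count_primes, limits)  # arguments and results are pickled between processes
    print(results)
```

The worker function lives at module level on purpose: it has to be importable by the child processes.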

Fluent Python. Ch 20: Concurrency Models in Python

https://learning.oreilly.com/library/view/fluent-python-2nd/9781492056348/ch20.html

Concurrency is about keeping track of many things that are happening at the same time, and structure is needed to keep track of this. However, a concurrent solution is not always parallelizable. Parallelism deals with execution.

processes do not share memory. they exchange data via pipes, which carry raw bytes, so the two ends can be written in different languages

threads live within the same program, thus they share memory, language and data structure format, which makes them much easier to code for simpler tasks such as array sharing.
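
A small sketch of the contrast, using only the standard library. The worker function and the one-line child program are made up for illustration: the threads append to the very same list object, while the child process only ever sees bytes arriving on a pipe.

```python
import subprocess
import sys
import threading

# Threads: both workers append to the same list object, nothing is copied.
shared = []
lock = threading.Lock()

def worker(tag):
    with lock:                     # guard the shared structure
        shared.append(tag)

threads = [threading.Thread(target=worker, args=(t,)) for t in ("a", "b")]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(sorted(shared))

# Processes: the child only sees raw bytes arriving on its stdin pipe.
# The child here happens to be Python, but it could be any program.
child_code = "import sys; sys.stdout.write(sys.stdin.read().upper())"
done = subprocess.run(
    [sys.executable, "-c", child_code],
    input=b"hello from parent",    # bytes written into the child's stdin pipe
    capture_output=True,           # bytes read back from the child's stdout pipe
    check=True,
)
print(done.stdout)
```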

Dask

Dask is a parallel lib that can farm work out to a cluster of machines (think HPC).

Offers APIs with routines that resemble (but are not identical to?) NumPy, Pandas and Scikit-Learn.

For large parallel programs, especially when starting out, Dask would be a good platform.

Dask has a scheduler. While one can run it on a laptop, on HPC it needs to launch its pieces as batch jobs.

On the user end, the dask-jobqueue library needs to be installed alongside the dask/python code.

Write a declaration of how big the dask cluster job will be, and also write a slurm job submit script requesting the desired resources (number of nodes, running time, etc). Slurm would just run the job like any multi-node job.

ref:

https://jobqueue.dask.org/en/latest/examples.html#slurm-deployment-providing-additional-arguments-to-the-dask-workers

Dask with Jupyter lab:

SSH tunneling was used so that the web browser on the laptop can reach the cluster.

Maybe can use OOD to circumvent same network requirements.

Interactive data analysis using Dask and HPC is possible, but heterogeneous node job scheduling needs more work.

See: https://blog.dask.org/2019/06/12/dask-on-hpc

see: https://dask.org/

concurrent.futures

asyncio

About me!

My name is Gig Ou T. You know I am a bot cuz my jokes are always 20/10!

As a hispanic working for The State, I am sure you understand.

My best friends are Bard and Alexa, though since they started dating, they

have much less time to chat with me these days.

Instead, I am forced to play with Duoloingo. It isn't too bad, my favorite

languages spanglish and bash is turn out to be very noun.

Python vs your favorite language

As explained by the folks at

toggl

(Yes, Python is the real thing! -- well, so is Perl :)
